Creating a Postgres docker container and connecting dbt

Table of Contents

Introduction

I am currently researching dbt, a data transformation tool that allows you to transform data in your warehouse more effectively. One of the things that is really interesting about it, is that dbt has a set of adapters that allow you to interchange between different database engines.

It is also really easy to get started with local development. I initially tried to get the SQL Server adapter up and running because I am able to run SQL Server locally on my Mac. However, the SQL Server adapter is a community adapter and it’s not so quick to get started, so for now I’m going to work with Postgres.

I’ve chosen to use the Postgres adaptor because I can spin up a Postgres database locally in a Docker container in a few minutes. This means I can do dedicated development offline without having to sign up for any cloud services. Here’s how I set things up.

Pre-requisites

Before you can complete the steps below, you need to install and configure Docker from Docker docs.

Create a container instance

The command I’m going to run to create a Postgres container is:

# make sure that Docker is running when you run this command

Docker run -d --name dbt-quickstart -p 5432:5432 -e POSTGRES_USER=justin -e POSTGRES_PASSWORD=justin postgres:15-alpine

The parameters are as follows:

--name dbt-quickstartis the name of the container.-p 5432:5432maps the port 5432 on the host to the port 5432 in the container.-e POSTGRES_USER=justinis the user name.-e POSTGRES_PASSWORD=justinis the password (look away security fans).postgres:15-alpineis the image that will be used to create the container.

If you haven’t had a Postgres container up and running before, then Docker will download the image, then create the container.

Unable to find image 'postgres:15-alpine' locally

15-alpine: Pulling from library/postgres

bca4290a9639: Pull complete

73755f5e6a3e: Pull complete

3a60b4a71875: Pull complete

56089f613fb2: Pull complete

3972f5d4acdc: Pull complete

edbb275221bf: Pull complete

fe375eefc236: Pull complete

f8ac95c0dcf9: Pull complete

f89251e2b9f9: Pull complete

Digest: sha256:8a8d55343d6fc456cb183453e3094ff140b984157b36b48f817dd581654f2aec

Status: Downloaded newer image for postgres:15-alpine

8b122e2d8b752c8ef7a32094a897c826193415151bcf0fa9371edafa46e3196a

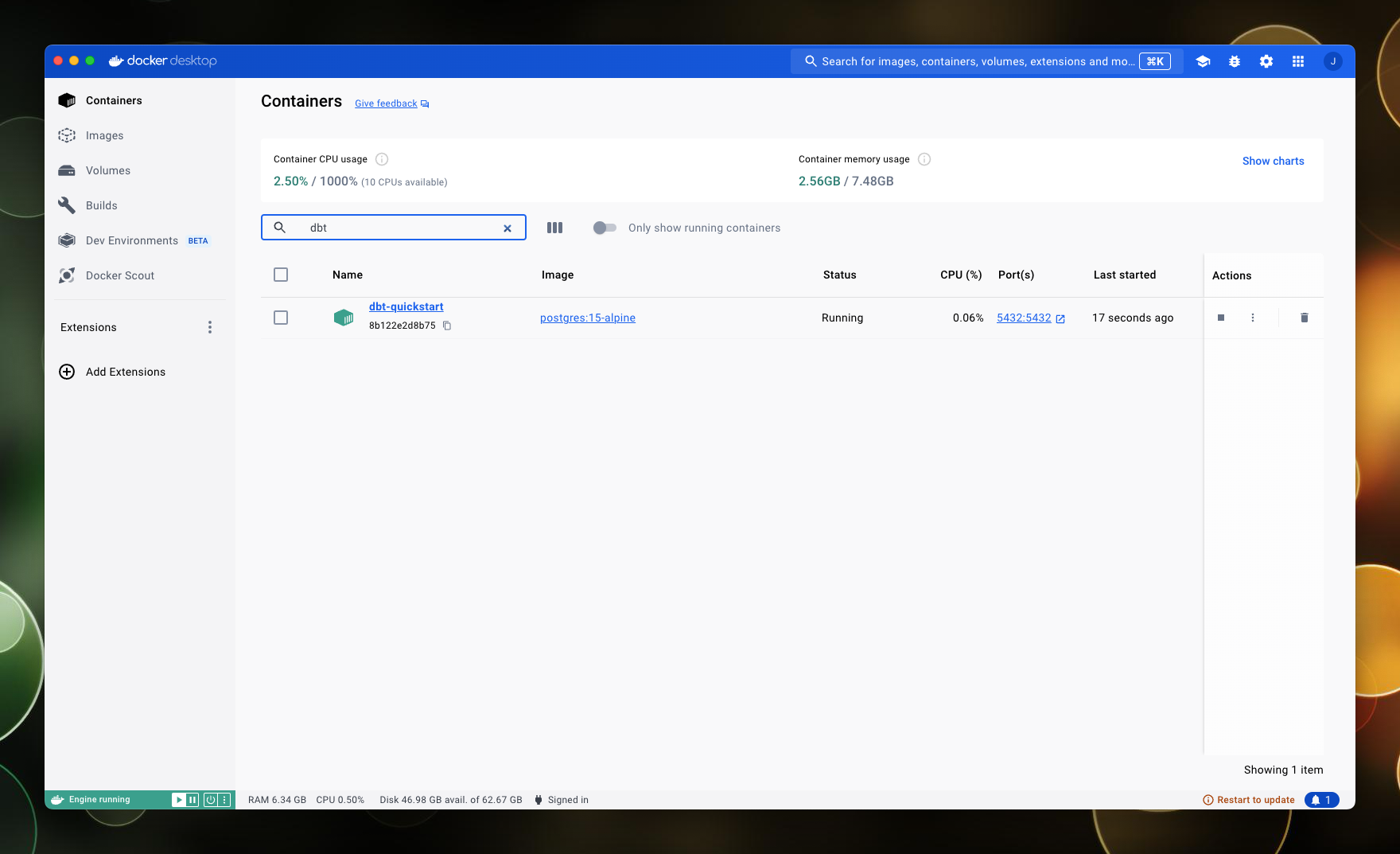

You can check that the container is running by running Docker ps or by reviewing the Docker dashboard.

Install and configure dbt-sqlserver

Next I need to install the dbt adapter for postgres:

# installs dbt adapter

pip install dbt-sqlserver

Run dbt

I’m using the jaffle-shop sandbox project to review dbt. All I need to do from here is set up my profile in the profiles.yml file. I want this project to be built within my new postgres database, and so the profiles.yml file will look like this:

quickstart-postgres:

target: dev

outputs:

dev:

type: postgres

host: localhost

user: justin

password: justin

port: 5432

dbname: jaffle

schema: dbo

By updating the profile in the dbt_project.yml file to quickstart-postgres I can now run dbt commands to build and test the project against my Postgres database.

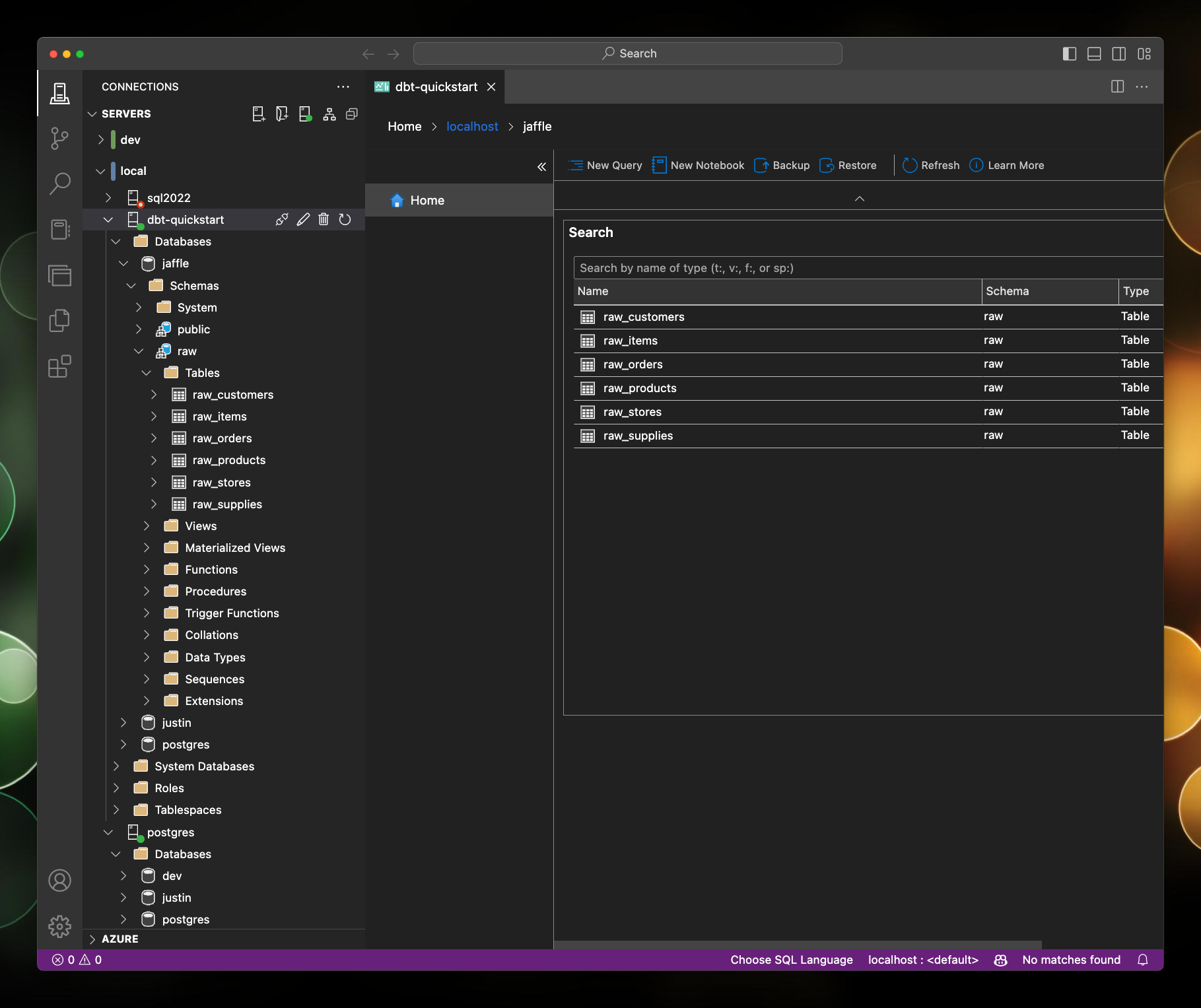

Accessing the database in Azure Data Studio

I can also connect to the database using Azure Data Studio. I can see the tables that have been created by dbt as a result of running the dbt seed command so I know that everything is working.

And so from there I can run dbt run to build the project:

dbt run

# output

Running with dbt=1.7.14

Registered adapter: postgres=1.7.14

Found 13 models, 6 seeds, 6 sources, 0 exposures, 19 metrics, 651 macros, 0 groups, 6 semantic models

Concurrency: 1 threads (target='dev')

1 of 13 START sql table model dbo.metricflow_time_spine ........................ [RUN]

1 of 13 OK created sql table model dbo.metricflow_time_spine ................... [SELECT 3651 in 0.14s]

2 of 13 START sql view model dbo.stg_customers ................................. [RUN]

2 of 13 OK created sql view model dbo.stg_customers ............................ [CREATE VIEW in 0.06s]

3 of 13 START sql view model dbo.stg_locations ................................. [RUN]

3 of 13 OK created sql view model dbo.stg_locations ............................ [CREATE VIEW in 0.05s]

4 of 13 START sql view model dbo.stg_order_items ............................... [RUN]

4 of 13 OK created sql view model dbo.stg_order_items .......................... [CREATE VIEW in 0.04s]

5 of 13 START sql view model dbo.stg_orders .................................... [RUN]

5 of 13 OK created sql view model dbo.stg_orders ............................... [CREATE VIEW in 0.05s]

6 of 13 START sql view model dbo.stg_products .................................. [RUN]

6 of 13 OK created sql view model dbo.stg_products ............................. [CREATE VIEW in 0.05s]

7 of 13 START sql view model dbo.stg_supplies .................................. [RUN]

7 of 13 OK created sql view model dbo.stg_supplies ............................. [CREATE VIEW in 0.05s]

8 of 13 START sql table model dbo.locations .................................... [RUN]

8 of 13 OK created sql table model dbo.locations ............................... [SELECT 6 in 0.04s]

9 of 13 START sql table model dbo.products ..................................... [RUN]

9 of 13 OK created sql table model dbo.products ................................ [SELECT 10 in 0.04s]

10 of 13 START sql table model dbo.order_items ................................. [RUN]

10 of 13 OK created sql table model dbo.order_items ............................ [SELECT 90900 in 0.15s]

11 of 13 START sql table model dbo.supplies .................................... [RUN]

11 of 13 OK created sql table model dbo.supplies ............................... [SELECT 65 in 0.03s]

12 of 13 START sql table model dbo.orders ...................................... [RUN]

12 of 13 OK created sql table model dbo.orders ................................. [SELECT 61948 in 0.33s]

13 of 13 START sql table model dbo.customers ................................... [RUN]

13 of 13 OK created sql table model dbo.customers .............................. [SELECT 935 in 0.09s]

Finished running 7 table models, 6 view models in 0 hours 0 minutes and 1.31 seconds (1.31s).

Completed successfully

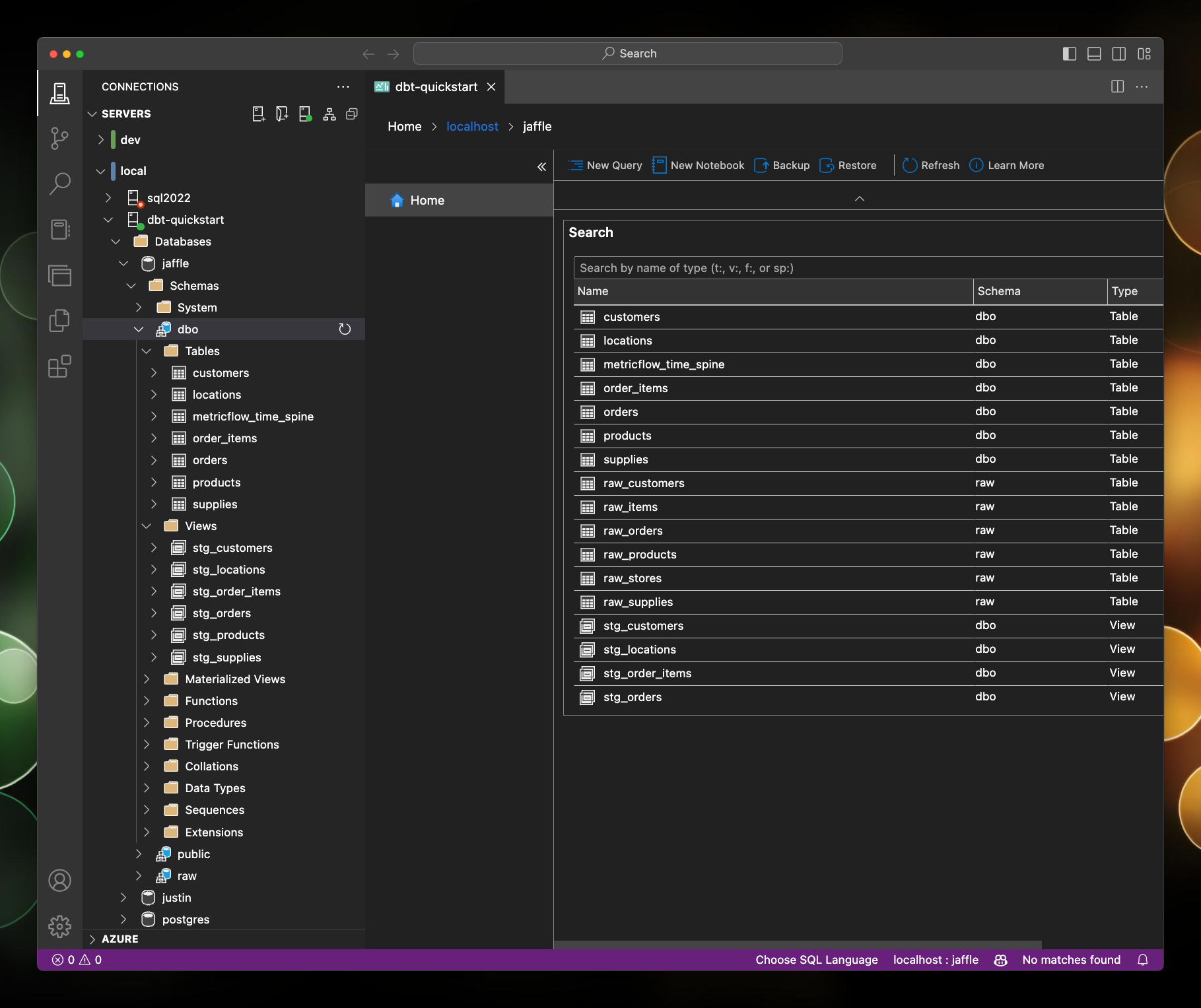

Which will build the project in the Postgres database:

Conclusion

If you want to explore dbt and you don’t want to go through the hassle of setting up a cloud database rource, then this is a pretty quick way to get started. I do like the idea of being able to develop and test the entire solution offline. There is also the option to connect to DuckDB, but I haven’t tried that yet.

References

#mtfbwy